subscribe via RSS

-

We've been acquired by Fastly

I’m excited to share that Fanout is now part of Fastly! Over the coming months we’ll be rolling out our realtime/push capabilities onto their global network.

See also Fastly’s announcement.

-

A cloud-native platform for push APIs

Today we are excited to announce Fanout Platform!

Fanout Platform is infrastructure software for building HTTP streaming and WebSocket APIs. It is comprised of our open source project, Pushpin, along with special add-ons for scaling. Helm charts are available for installation on Kubernetes.

Here’s a short video that shows how easy it is to upgrade from Pushpin to Fanout Platform:

Read on to learn about why we are doing this, and how it works.

-

Rewriting Pushpin's connection manager in Rust

For over 7 years, Pushpin used Mongrel2 for managing HTTP and WebSocket client connections. Mongrel2 served us well during that time, requiring little maintenance. However, we are now in the process of replacing it with a new project, Condure. In this article we’ll discuss how and why Condure was developed.

-

WebSockets with AWS Lambda

Fanout Cloud handles long-lived connections, such as HTTP streaming and WebSocket connections, on behalf of API backends. For projects that need to push data at scale, this can be a smart architecture. It also happens to be handy with function-as-a-service backends, such as AWS Lambda, which are not designed to handle long-lived connections on their own. By combining Fanout Cloud and Lambda, you can build serverless realtime applications.

Of course, Lambda can integrate with services such as AWS IoT to achieve a similar effect. The difference with Fanout Cloud is that it works at a lower level, giving you access to raw protocol elements. For example, Fanout Cloud enables you to build a Lambda-powered API that supports plain WebSockets, which is not possible with any other service.

To make integration easy, we’ve introduced FaaS libraries for Node.js and Python. Read on to learn how it all works.

-

High scalability with Fanout and Fastly

Fanout Cloud is for high scale data push. Fastly is for high scale data pull. Many realtime applications need to work with data that is both pushed and pulled, and thus can benefit from using both of these systems in the same application. Fanout and Fastly can even be connected together!

Using Fanout and Fastly in the same application, independently, is pretty straightforward. For example, at initialization time, past content could be retrieved from Fastly, and Fanout Cloud could provide future pushed updates. What does it mean to connect the two systems together though? Read on to find out.

-

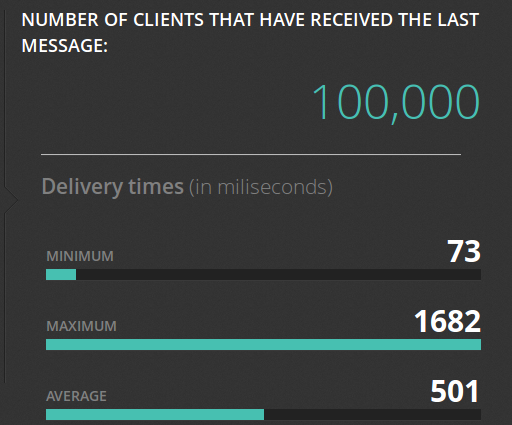

Pushing to 100,000 API clients simultaneously

Earlier this year we announced the open source Pushpin project, a server component that makes it easy to scale out realtime HTTP and WebSocket APIs. Just what kind of scale are we talking about though? To demonstrate, we put together some code that pushes a truckload of data through a cluster of Pushpin instances. Here’s the output of its dashboard after a successful run:

Before getting into the details of how we did this, let’s first establish some goals:

- We want to scale an arbitrary realtime API. This API, from the perspective of a connecting client, shouldn’t need to be in any way specific to the components we are using to scale it.

- Ideally, we want to scale out the number of delivery servers but not the number of application servers. That is, we should be able to massively amplify the output of a modest realtime source.

- We want to push to all recipients simultaneously and we want the deliveries to complete in about 1 second. We’ll shoot for 100,000 recipients.

To be clear, sending data to 100K clients in the same instant is a huge level of traffic. Disqus recently posted that they serve 45K requests per second. If, using some very rough math, we say that a realtime push is about as heavy as half of a request, then our demonstration requires the same bandwidth as the entire Disqus network, if only for one second. This is in contrast to benchmarks that measure “connected” clients, such as the Tigase XMPP server’s 500K single-machine benchmark, where the clients participate conservatively over an extended period of time. Benchmarks like these are impressive in their own right, just be aware that they are a different kind of demonstration.

• filed under

• filed under