Fun with Zurl, the HTTP/WebSocket client daemon

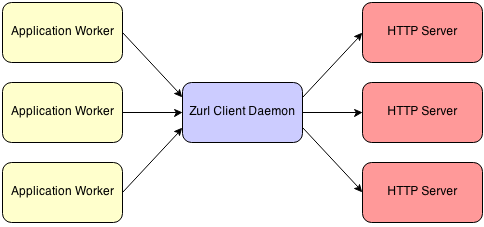

Zurl is a gateway that converts between ZeroMQ messages and outbound HTTP requests or WebSocket connections. It gives you powerful access to these protocols from within a message-oriented architecture. The following diagram shows how Zurl may fit in among the entities involved:

Any number of workers can contact any number of HTTP or WebSocket servers, and Zurl will perform conversions to/from ZeroMQ messages as necessary. It uses libcurl under the hood.

The format of the messages exchanged between workers and Zurl is described by the ZHTTP protocol. ZHTTP is an abstraction of HTTP using JSON-formatted or TNetString-formatted messages. The protocol makes it easy to work with HTTP at a high level, without needing to worry about details such as persistent connections or chunking. Zurl takes care of those things for you when gatewaying to the servers.

For example, here’s some Python code to perform a GET request:

    import json
    import uuid

    import zmq

    ctx = zmq.Context()
    sock = ctx.socket(zmq.REQ)
    sock.connect('ipc:///tmp/zurl-req')

    # send a GET request; the leading 'J' marks the payload as JSON
    req = dict()
    req['id'] = str(uuid.uuid4())
    req['method'] = 'GET'
    req['uri'] = 'http://google.com/'
    sock.send(('J' + json.dumps(req)).encode('utf-8'))

    # receive the response, skipping the one-byte format prefix
    resp = json.loads(sock.recv()[1:])
    if 'type' in resp and resp['type'] == 'error':
        print('error: %s' % resp['condition'])
    else:
        print('code=%d reason=[%s]' % (resp['code'], resp['reason']))
        for h in resp['headers']:
            print('%s: %s' % (h[0], h[1]))
        print('\n%s' % resp.get('body', ''))

The above code sends a message to Zurl containing the URL to request against, and then waits to receive a message containing the entire response to that request. Status code, body, etc. are all fields within the response message.
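The `'J'` prefix used in the request above is the format marker: the first byte of a ZHTTP message says whether the rest is JSON or TNetString. A minimal sketch of that framing, with hypothetical helper names `encode_zhttp_json` and `decode_zhttp` (they are not part of Zurl), might look like this:

```python
import json
import uuid


def encode_zhttp_json(fields):
    """Serialize a dict as a JSON-format ZHTTP message: b'J' followed by JSON."""
    return b'J' + json.dumps(fields).encode('utf-8')


def decode_zhttp(raw):
    """Decode a ZHTTP message, dispatching on the one-byte format prefix."""
    prefix, payload = raw[:1], raw[1:]
    if prefix == b'J':
        return json.loads(payload.decode('utf-8'))
    if prefix == b'T':
        # TNetString payloads need a separate parser, which this sketch omits.
        raise NotImplementedError('TNetString decoding is not covered here')
    raise ValueError('unknown ZHTTP format prefix: %r' % prefix)


# Round-trip a request like the one in the GET example.
req = {'id': str(uuid.uuid4()), 'method': 'GET', 'uri': 'http://example.com/'}
wire = encode_zhttp_json(req)
decoded = decode_zhttp(wire)
```

With helpers like these, the send and receive lines in the example reduce to `sock.send(encode_zhttp_json(req))` and `resp = decode_zhttp(sock.recv())`.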

If you’re not already building message-based applications, then you might wonder why this approach is useful. After all, every language/platform by now has decent HTTP client capability. The value of introducing a separate component like this primarily comes down to philosophy and taste. In messaging architectures, it’s ideal to have many small components each handling discrete tasks. Therefore, it is reasonable to consider isolating HTTP/WebSocket protocol logic into its own component.

Below, we’ll go over some interesting things you can do with Zurl. Users of messaging architectures (particularly ZeroMQ) should appreciate them right away. Those not developing with messaging will hopefully be inspired.

- Make an HTTP request without blocking your pipeline

- Fire-and-forget a Webhook call

- Receiver is a different worker than the sender

- Shared persistent connections

- Relay a stream to multiple receivers

- Connections transcend worker lifetime

Make an HTTP request without blocking your pipeline

If you write ZeroMQ modules in a blocking style, as opposed to an event-driven style, then you risk blocking your module’s execution whenever you perform an HTTP request using normal library functions. For example, suppose you’ve got a worker written in Python that calls urllib.request.urlopen() in the middle of its message handling to access some remote REST API.

    import json
    import urllib.request

    import zmq

    ctx = zmq.Context()

    def worker():
        in_sock = ctx.socket(zmq.PULL)
        in_sock.connect('ipc://inqueue')
        out_sock = ctx.socket(zmq.PUSH)
        out_sock.connect('ipc://outqueue')

        while True:
            m = json.loads(in_sock.recv())
            mid = m['id']

            # construct uri and POST data (as bytes) based on message
            uri = ...
            data = ...

            try:
                # blocks until the remote server has fully responded
                urllib.request.urlopen(uri, data).read()
                m = { 'id': mid, 'success': True }
            except Exception:
                m = { 'id': mid, 'success': False }

            # push success/fail
            out_sock.send(json.dumps(m).encode('utf-8'))

Blocking on remote I/O like this is very wasteful, and supporting N concurrent outbound requests would require N worker instances. Instead, let’s split the code into two workers and insert Zurl into the chain:

    import uuid

    # json, zmq and ctx are set up as in the earlier examples

    def in_worker():
        in_sock = ctx.socket(zmq.PULL)
        in_sock.connect('ipc://inqueue')
        zurl_out_sock = ctx.socket(zmq.PUSH)
        zurl_out_sock.connect('ipc:///tmp/zurl-in')

        while True:
            m = json.loads(in_sock.recv())
            mid = m['id']

            # construct uri and POST data based on message
            uri = ...
            data = ...

            zreq = dict()
            zreq['from'] = 'worker'
            zreq['id'] = str(uuid.uuid4())
            zreq['method'] = 'POST'
            zreq['uri'] = uri
            zreq['body'] = data
            # echoed back in the response, so out_worker can match it up
            zreq['user-data'] = mid
            zurl_out_sock.send(('J' + json.dumps(zreq)).encode('utf-8'))

    def out_worker():
        zurl_in_sock = ctx.socket(zmq.SUB)
        # subscriptions match by prefix. trailing space for exact match
        zurl_in_sock.setsockopt(zmq.SUBSCRIBE, b'worker ')
        zurl_in_sock.connect('ipc:///tmp/zurl-out')
        out_sock = ctx.socket(zmq.PUSH)
        out_sock.connect('ipc://outqueue')

        while True:
            # skip the 'worker ' address prefix (7 bytes) and the 'J' format byte
            zresp = json.loads(zurl_in_sock.recv()[8:])
            if zresp.get('code') == 200:
                m = { 'id': zresp['user-data'], 'success': True }
            else:
                m = { 'id': zresp['user-data'], 'success': False }

            # push success/fail
            out_sock.send(json.dumps(m).encode('utf-8'))

Since Zurl itself is event-driven, this message flow provides maximum efficiency for handling remote I/O while still letting you write the workers in a blocking style. There is also no more need to spawn N workers to handle N concurrent outbound requests. With only one instance of in_worker and one instance of out_worker (two workers total), this code supports thousands of concurrent requests.
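The `[8:]` slice in out_worker hard-codes the length of the `'worker '` address prefix plus the format byte. As a sketch, a small helper (the name `parse_pub_message` is hypothetical) could split on the first space instead, assuming the address itself never contains a space and the payload is JSON-format:

```python
import json


def parse_pub_message(raw):
    """Split a message from Zurl's PUB socket into (address, fields).

    Assumed layout, as in the examples above: b'<address> ' + b'J' + JSON.
    """
    address, _, packet = raw.partition(b' ')
    if packet[:1] != b'J':
        raise ValueError('expected a JSON-format ZHTTP packet')
    return address.decode('utf-8'), json.loads(packet[1:].decode('utf-8'))


# Simulated PUB-socket message addressed to 'worker'.
raw = b'worker J' + json.dumps({'code': 200, 'user-data': 'job-1'}).encode('utf-8')
address, zresp = parse_pub_message(raw)
```

This keeps the receive loop independent of the address length, which matters once more than one `from` value is in use.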

Fire-and-forget a Webhook call

HTTP callbacks (aka Webhooks) are used to notify or provide data to a remote entity. In many cases, the responses to such calls are not terribly interesting. Ever wish you could just “fire-and-forget” an outbound HTTP request? Zurl lets you do exactly that:

    zurl_out_sock = ctx.socket(zmq.PUSH)
    zurl_out_sock.connect('ipc:///tmp/zurl-in')

    # uri and data hold the Webhook URL and the POST body
    zreq = dict()
    zreq['id'] = str(uuid.uuid4())
    zreq['method'] = 'POST'
    zreq['uri'] = uri
    zreq['body'] = data
    # no 'from' field: nobody is waiting for the response
    zurl_out_sock.send(('J' + json.dumps(zreq)).encode('utf-8'))

The above code will execute super fast, as all it does is asynchronously trigger the HTTP request within Zurl. There will be no waiting around for the HTTP response. Got some Webhook invocation code that uses a blocking HTTP call? Get rid of that blocking call and send a message to Zurl instead. Instant efficiency.

Also, using Zurl for Webhooks provides extra security, because it can be configured to prevent evil callback URLs from being able to hit your internal network. This is a harder problem to solve than you might think.
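To give a feel for why this is hard, here is a rough sketch of the address check involved. It is not Zurl's implementation, and the function name `is_internal_ip` is made up for illustration. It only classifies a literal IP address using Python's standard `ipaddress` module:

```python
import ipaddress


def is_internal_ip(address):
    """Return True if a literal IP address should be off-limits to Webhooks."""
    ip = ipaddress.ip_address(address)
    return (ip.is_private or ip.is_loopback or ip.is_link_local
            or ip.is_reserved or ip.is_multicast or ip.is_unspecified)


# A few literal addresses and how the sketch classifies them.
checks = {
    '10.0.0.5': is_internal_ip('10.0.0.5'),
    '127.0.0.1': is_internal_ip('127.0.0.1'),
    '169.254.169.254': is_internal_ip('169.254.169.254'),
    '8.8.8.8': is_internal_ip('8.8.8.8'),
}
```

The catch is that checking the URL's hostname up front is not enough. A public-looking hostname can resolve to an internal address, and a redirect can point somewhere else entirely, so the check has to be applied to the address actually being connected to, on every hop. That is much easier to enforce inside the component that makes the connection.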

Receiver is a different worker than the sender

In an earlier example, we demonstrated that a worker sending a request need not be the same worker that processes the response. However, this ability goes beyond simply splitting a single logical operation into two pieces of code. The send and receive workers could just as well have very little to do with each other, and share little-to-no state. Further, the handlers don’t need to be running within the same process nor be running on the same machine. There doesn’t even need to be a 1:1 relationship between the number of sending and receiving handlers.

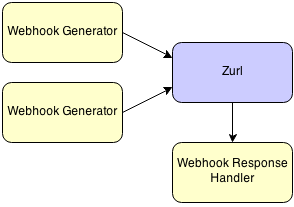

For example, suppose you really do want to check the responses of your Webhook calls so that you can unsubscribe any URLs that respond with errors. You could design your architecture such that there are workers that generate Webhook calls and don’t care about responses, and workers that only care about Webhook responses and nothing else:

With this approach, the generators throw requests at Zurl, and the response handler deletes subscriptions from the database as necessary. The enqueuing of requests and the handling of errors could be viewed as distinct logical operations within this kind of system. Look at it this way: if the response handler is disabled, then subscription cleanup would not be performed, but Webhooks would still be delivered.
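As a sketch of what the response handler's core logic might look like, assume the generators set a `from` value the handler subscribes to and place the callback URL in `user-data` (a convention chosen here for illustration), and that each request yields a single response message. The in-memory set stands in for the subscription database:

```python
def handle_webhook_response(zresp, subscriptions):
    """Drop a subscription when its Webhook call failed.

    Returns True if a subscription was removed.
    """
    url = zresp.get('user-data')
    is_error = zresp.get('type') == 'error'
    bad_status = not (200 <= zresp.get('code', 0) < 300)
    if (is_error or bad_status) and url in subscriptions:
        subscriptions.discard(url)
        return True
    return False


# Hypothetical subscriber URLs and responses as the handler would see them.
subscriptions = {'http://a.example/hook', 'http://b.example/hook'}
removed_a = handle_webhook_response(
    {'code': 500, 'user-data': 'http://a.example/hook'}, subscriptions)
removed_b = handle_webhook_response(
    {'code': 200, 'user-data': 'http://b.example/hook'}, subscriptions)
```

The generators never see these responses, and the handler never needs to know how or why a call was made.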

Shared persistent connections

Zurl supports persistent connections. If a worker makes a request to a host and then a few seconds later another worker makes a request to the same host, then Zurl will reuse the connection from the original request. This is especially useful for HTTPS connections, which have a lot of overhead to become established.

This can be confirmed with Zurl’s get.py program:

    $ time python tools/get.py https://www.google.com/
    [...]
    real 0m0.233s
    user 0m0.040s
    sys 0m0.004s

    $ time python tools/get.py https://www.google.com/
    [...]
    real 0m0.133s
    user 0m0.028s
    sys 0m0.012s

As you can see, the subsequent request finishes almost twice as fast. Of course, an ordinary HTTP proxy can provide persistent connections as well, so this is not a feature exclusive to Zurl. Still, it’s pretty cool.

Relay a stream to multiple receivers

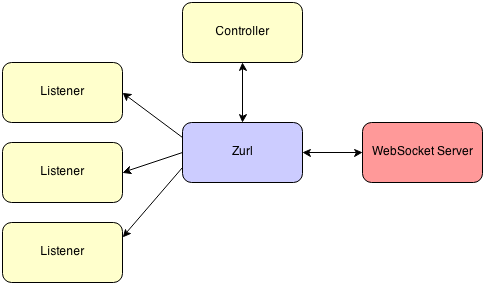

Here’s where things get wild. Zurl’s split interface uses PULL and ROUTER sockets to receive data and a PUB socket to send data. Because PUB is used for sending, this means that more than one worker is able to receive response data for the same HTTP request or WebSocket connection. Imagine this arrangement:

The idea here is to have a controlling worker that manages a connection, and then one or more listeners that receive data from that connection. For example, we could use this design to subscribe many listeners to a single upstream WAMP PubSub channel. Below is some possible code for the controller:

    import json
    import time
    import uuid

    import zmq

    ctx = zmq.Context()

    def get_data_message(in_sock):
        data = ''
        while True:
            # skip the address prefix, the space and the 'J' format byte
            zresp = json.loads(in_sock.recv()[len(instance_id) + 2:])
            zfrom = zresp['from']
            if 'type' in zresp:
                continue # not data
            if 'body' in zresp:
                data += zresp['body']
            if not zresp.get('more'):
                # 'more' flag not set, we're done
                break
        return zfrom, data

    channel = 'http://example.com/channels/foo'
    instance_id = channel

    out_sock = ctx.socket(zmq.PUSH)
    out_sock.connect('ipc:///tmp/zurl-in')
    out_stream_sock = ctx.socket(zmq.ROUTER)
    out_stream_sock.connect('ipc:///tmp/zurl-in-stream')
    in_sock = ctx.socket(zmq.SUB)
    in_sock.setsockopt(zmq.SUBSCRIBE, (instance_id + ' ').encode('utf-8'))
    in_sock.connect('ipc:///tmp/zurl-out')

    # ensure zurl subscription
    time.sleep(0.5)

    rid = str(uuid.uuid4())
    outseq = 0

    # send handshake
    zreq = dict()
    zreq['from'] = instance_id
    zreq['id'] = rid
    zreq['seq'] = outseq
    zreq['uri'] = 'ws://example.com/wamp'
    out_sock.send(('J' + json.dumps(zreq)).encode('utf-8'))
    outseq += 1

    # receive handshake
    zfrom, data = get_data_message(in_sock)

    # receive WELCOME message
    zfrom, data = get_data_message(in_sock)
    m = json.loads(data)
    assert m[0] == 0 # TYPE_ID_WELCOME

    # subscribe to 'foo' channel
    zreq = dict()
    zreq['from'] = instance_id
    zreq['id'] = rid
    zreq['seq'] = outseq
    zreq['body'] = json.dumps([5, channel]) # TYPE_ID_SUBSCRIBE
    # follow-up packets go to the ROUTER socket, addressed to the Zurl instance
    out_stream_sock.send_multipart([zfrom.encode('utf-8'), b'', ('J' + json.dumps(zreq)).encode('utf-8')])
    outseq += 1

The above code will create a subscription with the WAMP service. Please note that for brevity the above code does not attempt to maintain the session nor handle non-data ZHTTP message types. This is fine for the purposes of our example, but a real controller implementation would need to take care of those things, too.
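As a rough sketch of the session maintenance left out above, a controller could periodically send a packet of type `keep-alive` for the session. The field names follow the ones used in the controller code, the `keep-alive` type is taken from the ZHTTP protocol as I understand it, and the helper names are hypothetical:

```python
import json


def make_keep_alive(instance_id, rid, seq):
    """Build a keep-alive packet for an existing session (assumed field names)."""
    return {'from': instance_id, 'id': rid, 'seq': seq, 'type': 'keep-alive'}


def frames_for_router(zfrom, packet):
    """Return multipart frames for the ROUTER socket: address, delimiter, payload."""
    return [zfrom.encode('utf-8'), b'', b'J' + json.dumps(packet).encode('utf-8')]


# Placeholder values; a real controller would use its own rid, outseq and zfrom.
packet = make_keep_alive('http://example.com/channels/foo', 'some-request-id', 2)
frames = frames_for_router('zurl-instance', packet)
```

In the controller, this would amount to calling `out_stream_sock.send_multipart(frames)` on a timer and incrementing `outseq` after each send. How often to send is left to the protocol documentation.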

To fan out the WAMP events to a set of listeners, fire up one or more instances of the following code:

    channel = 'http://example.com/channels/foo'
    instance_id = channel # get_data_message() uses this to strip the address prefix

    sock = ctx.socket(zmq.SUB)
    sock.setsockopt(zmq.SUBSCRIBE, (channel + ' ').encode('utf-8'))
    sock.connect('ipc:///tmp/zurl-out')

    while True:
        zfrom, data = get_data_message(sock)
        if len(data) == 0:
            continue

        m = json.loads(data)
        if m[0] == 8: # TYPE_ID_EVENT
            print(m[2])

Whenever a new pubsub event arrives, it will be sent to all listeners. Pubsub of pubsub!

The same approach could be used to process a streaming HTTP response as well. And, of course, the listeners could be running on different machines than the controller.

Connections transcend worker lifetime

Zurl does not care about the existence or nonexistence of ZeroMQ connections. It only cares about messages. This means that a worker can be restarted without breaking a corresponding HTTP request or WebSocket connection. Similarly, a worker can be started after a connection already exists and then begin working with it.

For an example of how to take advantage of this, consider again the multi-listener arrangement described in the previous section. Individual listeners can be restarted, or new listeners can be added, and they will be able to “tune in” to the existing WebSocket connection without any disruption to that connection.

Conclusion

Did you ever think an HTTP client would be taken to this level? Is Zurl an act of brilliance or overengineering at its finest? Does anyone really make outbound WebSocket connections from a server? If you are not developing with messaging or ZeroMQ yet, are you itching to try now? In any case, there is plenty of fun to be had with Zurl.
